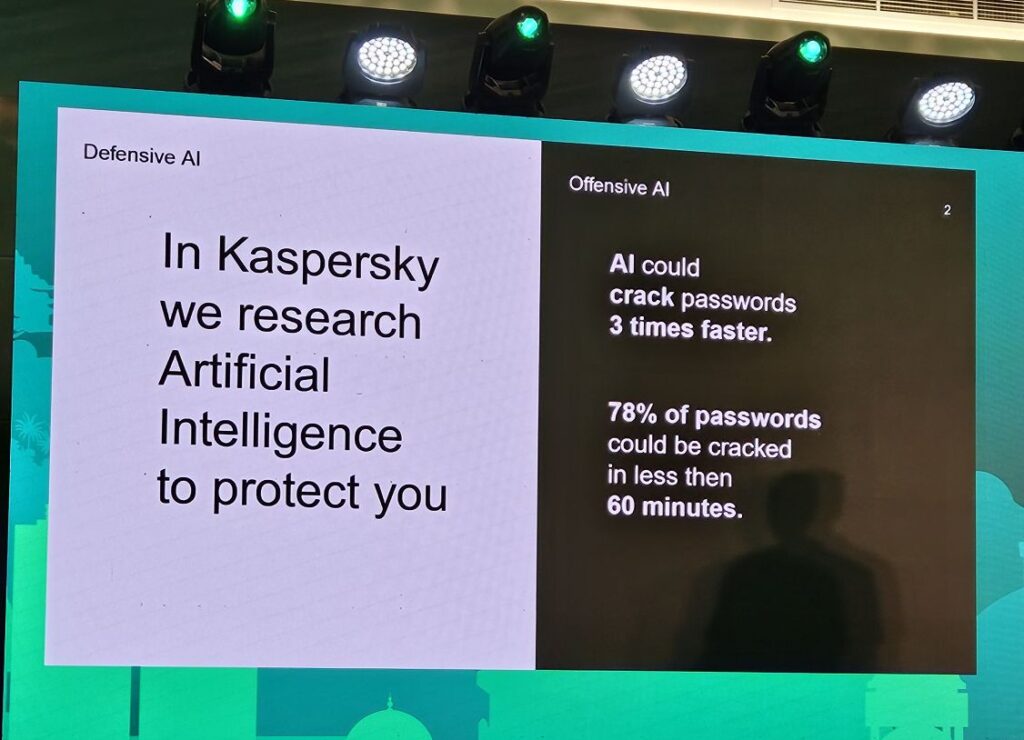

Kaspersky Labs reveals cybercriminals leveraging offensive AI in cyberattacks; 78% of passwords crackable in less than 1 hour

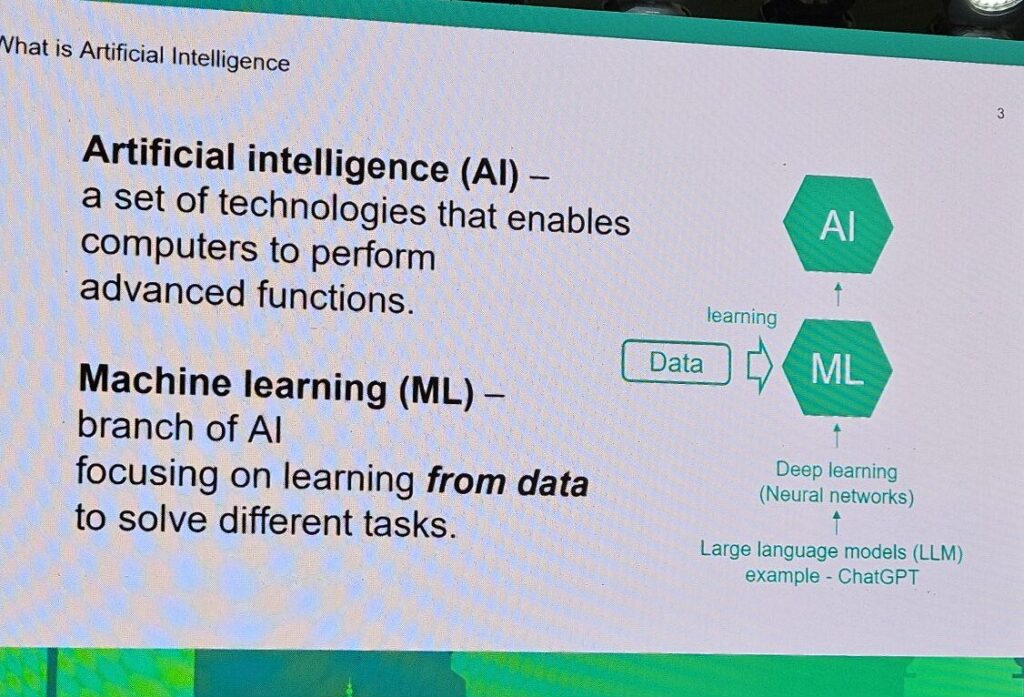

The recent Kaspersky Cyber Security Weekend 2024 summit revealed that cybercriminals and threat actors are using a range of offensive AI systems, both publicly available tools and other AI systems, to mount more sophisticated cyberattacks with greater chances of success.

Offensive AI possibilities and applications

According to Kaspersky, AI can be used to automate attacks, speed up routine tasks and execute more complex operations. It is also being employed to create more convincing phishing attacks.

Demonstrated examples of offensive AI applications include using ChatGPT to create malware and automate attacks on users, AI programs that can act as keyloggers to capture passwords and data, swarm intelligence that can operate botnets to restore malware even after a successful clean-up, and more.

One of the most powerful aspects of generative AI is that it can be used to create convincing, human-looking content. Threat actors using LLMs like ChatGPT-4o can create sophisticated phishing messages that sound like actual individuals, while deepfake videos can fake the appearance of actual people in video calls. One particularly famous attack happened in Hong Kong, where scammers mimicked a senior company executive authorising a fraudulent transfer of US$25 million.

Kaspersky Labs also conducted research on using AI for password cracking. Most passwords are stored in an encrypted fashion, and reverse engineering them is typically a challenging affair. However, a leaked password compilation published online in July 2024, containing 10 billion lines and 8.2 billion unique passwords, has made the job a whole lot easier for threat actors.

Alexey Antonov, Lead Data Scientist at Kaspersky, briefing about offensive AI threats at the recent Cyber Security Weekend 2024 in Sri Lanka

“We analysed this massive data leak and found that 32% of user passwords are not strong enough and can be reverted from encrypted hash form using a simple brute-force algorithm and a modern NVIDIA RTX 4090 GPU in less than 60 minutes,” said Alexey Antonov, Lead Data Scientist at Kaspersky.

He added, “We also trained a language model on the password database and tried to check passwords with the obtained AI method. We found that 78% of passwords could be cracked this way, which is about three times faster than using a brute-force algorithm. Only 7% of those passwords are strong enough to resist a long-term attack.”
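To give a rough sense of why short, simple passwords fall to brute force within an hour, the arithmetic can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not Kaspersky's methodology: the function names and the guess rate of 10 billion hashes per second are assumptions chosen for the example, and real rates depend heavily on the hash algorithm and hardware.

```python
import string

# Hypothetical guess rate (hashes per second). This figure is an assumption
# for illustration only; it is not taken from Kaspersky's research.
ASSUMED_GUESSES_PER_SECOND = 1e10


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def seconds_to_exhaust(alphabet_size: int, length: int,
                       guesses_per_second: float) -> float:
    """Worst-case time to try every password in the keyspace."""
    return keyspace(alphabet_size, length) / guesses_per_second


def crackable_within_hour(alphabet_size: int, length: int,
                          guesses_per_second: float) -> bool:
    """True if the whole keyspace can be exhausted in under 60 minutes."""
    return seconds_to_exhaust(alphabet_size, length, guesses_per_second) < 3600


# A few illustrative password shapes: (label, alphabet size, length).
examples = [
    ("8 digits", len(string.digits), 8),
    ("8 lowercase letters", len(string.ascii_lowercase), 8),
    ("8 letters and digits", len(string.ascii_letters + string.digits), 8),
    ("12 printable symbols", len(string.ascii_letters + string.digits
                                 + string.punctuation), 12),
]

for label, alphabet_size, length in examples:
    secs = seconds_to_exhaust(alphabet_size, length, ASSUMED_GUESSES_PER_SECOND)
    verdict = "under an hour" if secs < 3600 else "more than an hour"
    print(f"{label}: about {secs:.3g} seconds ({verdict})")
```

The point of the sketch is that the search space grows exponentially with length and alphabet size, so each extra character or character class multiplies the attacker's work. It only models exhaustive search; the language-model approach described above prioritises likely guesses instead of trying every combination.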

Kaspersky also leverages AI for defensive purposes, using AI models to detect threats and researching AI vulnerabilities to protect against future threats. For more details on offensive AI, check out www.kaspersky.com.